Flexibility vs. Interpretability

Introduction

Here we explore the difference between highly flexible and highly interpretable modeling techniques. Flexible modeling techniques can accommodate very complex datasets. Interpretable modeling techniques produce outputs that are easy to explain. We will dig into the difference between them, as well as various modeling techniques that lean one way or the other.

Developing Intuition

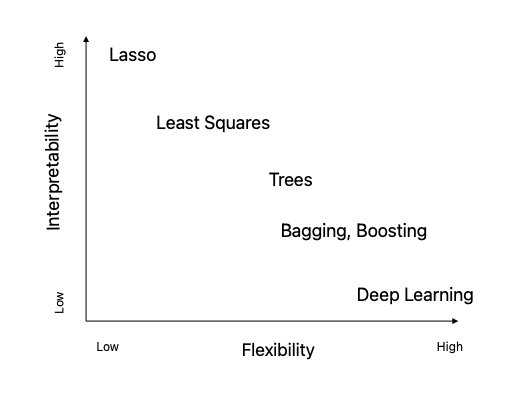

The graph above shows a handful of modeling techniques and how they differ in flexibility vs. interpretability. But why does flexibility or interpretability matter? And why do they seemingly contrast?

Flexibility is important because the data we have is often highly convoluted and complex. You are certainly aware of the immense amount of data out there, most of it unstructured. Models that can handle more or less any dataset are an advantage: rather than curating datasets carefully, we can feed vast amounts of data at scale into one model with a specified architecture and get back our desired output.

Interpretability is important because being able to predict or infer properties is fascinating and useful, but being able to show which dimension/predictor/feature is responsible for the output is often even more important. For instance, suppose we have 1000 variables on car consumers and can predict when a consumer will buy a car, down to the month. If we don’t know which of these variables matter most, do we really understand why consumers purchase cars when they do?

Highly flexible models are commonly described as a "black box": we can get them to work, but we don’t know how they work. In some cases, this is good enough; I don’t care how the model decides that the image is a cat, I just want it to distinguish cats from dogs. But in other cases, say in healthcare studies, we do want to understand why our model is telling us that this person will get disease X and that person won’t.

Regression As An Example of Interpretability

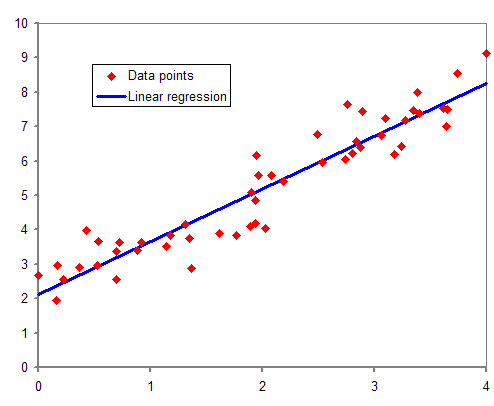

Although regression is not the most interpretable modeling technique, it is fairly interpretable and incredibly common. The key question to ask when searching for interpretability is: is the output easily explainable in the context of the data? This isn’t a binary, yes/no determination; often there are blurry areas. The figure above shows an example of a relatively interpretable model.

The output, our blue line, is pretty clear. We know it’s the line that minimizes the sum of squared errors (SSE), that is, the sum of the squared vertical distances between the points and the line, and when we look at it we can see why. The output here is obvious in the context of the data.
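The least-squares idea can be sketched in a few lines of plain Python. This is a minimal illustration, not the code behind the figure: the data points and the helper names `fit_line` and `sse` are made up for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for the line y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form solution: slope = covariance(x, y) / variance(x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sse(xs, ys, slope, intercept):
    """Sum of squared errors between the points and the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data, invented for illustration only
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(xs, ys)
best_sse = sse(xs, ys, slope, intercept)

# Any other line, such as one with a slightly steeper slope, has a larger SSE
nudged_sse = sse(xs, ys, slope + 0.1, intercept)

print(f"slope={slope:.2f}, intercept={intercept:.2f}")
print(f"SSE of fitted line: {best_sse:.3f}, SSE of nudged line: {nudged_sse:.3f}")
```

The whole model is two numbers. The slope says how much the prediction changes for each one-unit increase in x, which is exactly the kind of statement that makes regression easy to explain.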

Neural Networks As An Example of Flexibility

Our regression model above works well for that data. But what happens when we have audio, video, and text data that we want to combine to predict when an event will occur? While it might be possible to do that with regression, it would require so much preprocessing that a more flexible model, like a neural network, might be preferred. Plus, in this setting we may not care why the event occurs; we just want to know when, or if, it will.

But once we’ve trained our neural network, just what exactly is it picking up on? How is it able to predict the event with 93% accuracy? And what is it detecting in the other 7% of cases that throws it off? It’s not entirely clear, and even if we looked at all the weights, biases, and other parameters in a neural network model, we would still walk away scratching our heads.
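One rough way to see why staring at the weights doesn't help is to count them. The sketch below compares a linear model on 1000 input variables with a small fully connected network on the same inputs. The layer sizes and the helper `count_parameters` are hypothetical, chosen only for illustration.

```python
def count_parameters(layer_sizes):
    """Count weights plus biases in a fully connected network.

    layer_sizes lists the width of each layer, inputs first, e.g. [1000, 64, 1].
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-output connection
        total += n_out         # one bias per output unit
    return total


# Hypothetical architectures, assumed for this example
linear_model = [1000, 1]           # one coefficient per variable, plus an intercept
small_network = [1000, 64, 64, 1]  # two hidden layers of 64 units each

print("linear model parameters:", count_parameters(linear_model))
print("small network parameters:", count_parameters(small_network))
```

In the linear model, each coefficient belongs to exactly one input variable. In the network, every input feeds into every hidden unit, and the hidden units are mixed again in the next layer, so no single weight corresponds to "the effect of variable k".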

| Although this is generally true of neural networks, there has been some headway in making convolutional neural networks, for instance, show which visual features they are picking up on. See Chapter 5.4 in Deep Learning with Python by François Chollet. |

When Should I Prefer Flexibility or Interpretability?

When we are mainly interested in prediction, we should probably choose flexibility. When we want to infer how the inputs relate to the output, interpretability might be a better choice. Visit the Prediction vs. Inference Starter Guide page to learn more about that choice.